US researchers make Michelangelo's David dance to the beat to study creating photorealistic videos

If, while visiting the Accademia Gallery in Florence, you have ever wondered how Michelangelo’s David would behave if it started dancing to Haddaway’s What Is Love (or to any other dance track), your curiosity can now be satisfied. A group of researchers from NVIDIA Corporation (a U.S. company known for its graphics processors, video cards and multimedia computer products) has animated Michelangelo’s masterpiece and made it dance. The authors of the work are Ting-Chun Wang, Ming-Yu Liu, Andrew Tao, Guilin Liu, Jan Kautz and Bryan Catanzaro.

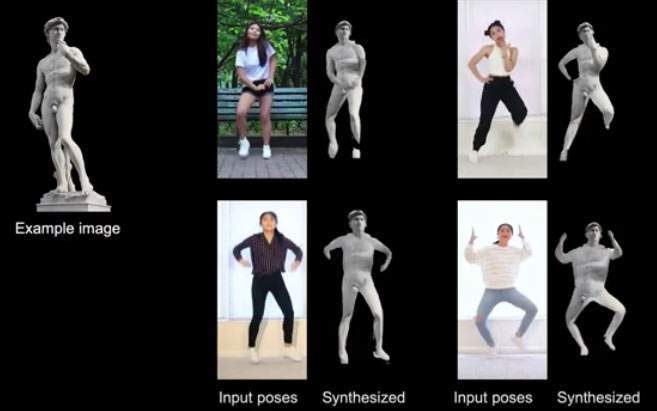

It is not that the six researchers had nothing better to do: their dancing David was used to test a video-to-video synthesis program that transfers the movements of flesh-and-blood people onto other people and onto objects (a statue, in the case of the David). “Video-to-video (vid2vid) synthesis,” reads the summary of the researchers’ paper, “aims to convert a semantic video input, for example a video of human movements or segmentation masks, into a photorealistic video.” The study also aims, the researchers explain, to overcome two serious limitations of vid2vid synthesis. The first is that such models require a huge amount of data to guarantee acceptable results. The second is that a vid2vid model has limited generalization capabilities: it is effective only when applied to the person who posed to serve as its model, so it cannot be used to create videos of other people.

The system developed by the NVIDIA researchers learns how to synthesize certain inputs and then transfer them to subjects other than those who “posed” as models for the program: the David moving on the dance floor is the result of this research into the creation of realistic videos. The same was done with photos of people who volunteered for the test: given two inputs (a video of a dancing figure and photographs of the people to be animated), the program produced photorealistic videos of the subjects portrayed in the photographs, who found themselves (in spite of themselves, we might say) dancing to disco music. The study and a video with test animations can be found at this link.

In short, studies like this one are advancing the state of the art in applications that create photorealistic images and videos. They also cause plenty of headaches, especially when we think of the problems around privacy and deepfakes, i.e., realistic (but fake) images made from a photograph of a real person (there are already, for example, applications that literally “undress” a person from nothing more than a photograph). Videos like the one of the dancing David could be the next (and, come to think of it, somewhat disturbing) evolution.

